Yuan Zhang

PhD, Biostatistics

Co-Authors:

David M. Vock, Thomas A. Murray

Advisor:

Dr. Thomas A. Murray

Educational Objectives:

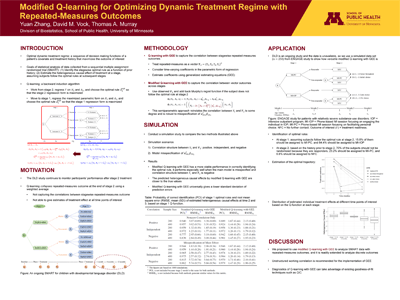

Identify the optimal dynamic treatment regime (DTR) in a sequential multiple assignment randomized trial (SMART) with repeated-measures outcomes at each stage of treatment, using a modified Q-learning method. Compare our proposed method with standard Q-learning.

Keywords:

Dynamic treatment regime, repeated-measures outcomes

Abstract

Dynamic treatment regimes (DTRs) are of increasing interest in clinical trials and personalized medicine because they allow treatment decisions to be tailored to a patient’s treatment and covariate history. In some sequential multiple assignment randomized trials (SMARTs) for children with developmental language disorder (DLD), investigators monitor a patient’s performance by collecting repeated-measures outcomes at each stage of randomization as well as after the treatment period. Standard Q-learning with linear regression models as Q-functions is widely used to identify the optimal DTR, but it does not provide point estimates of the average treatment effect (ATE) at every time point of interest. Moreover, Q-learning in general is susceptible to misspecification of the outcome model. To address these problems, we propose a modified version of Q-learning that uses a generalized estimating equation (GEE) model as the Q-function. Simulation studies demonstrate that the proposed method performs well in identifying the optimal DTR and is robust to model misspecification.
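The abstract compares the proposed GEE-based method with standard Q-learning that uses linear regression Q-functions. As a rough illustration of that baseline only (not the authors' proposed method), here is a minimal pure-Python sketch of two-stage Q-learning by backward induction. It assumes a single outcome at the end of the trial, one scalar covariate per stage, treatments coded -1/+1, and hypothetical field names (`x1`, `a1`, `x2`, `a2`, `y`). The simulated data are made up and noise-free so that the stage-2 fit can be checked exactly.

```python
import random


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def ols(rows, y):
    """Least-squares coefficients from the normal equations X'X beta = X'y."""
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    return solve(xtx, xty)


def design(x, a):
    """Assumed linear Q-function features: intercept, covariate, treatment, treatment-by-covariate."""
    return [1.0, x, a, a * x]


def q_learning(data):
    """Two-stage Q-learning; returns (stage-1 coefficients, stage-2 coefficients)."""
    # Stage 2: regress the final outcome on stage-2 history and treatment.
    beta2 = ols([design(d["x2"], d["a2"]) for d in data], [d["y"] for d in data])
    # Pseudo-outcome: predicted outcome under the best stage-2 treatment.
    # With a in {-1, +1}, max over a of Q2 = main part + |treatment contrast|.
    pseudo = [beta2[0] + beta2[1] * d["x2"] + abs(beta2[2] + beta2[3] * d["x2"])
              for d in data]
    # Stage 1: regress the pseudo-outcome on stage-1 history and treatment.
    beta1 = ols([design(d["x1"], d["a1"]) for d in data], pseudo)
    return beta1, beta2


def rule(beta, x):
    """Estimated optimal treatment: sign of the treatment contrast (ties go to +1)."""
    return 1 if beta[2] + beta[3] * x >= 0 else -1


# Hypothetical, noise-free SMART-like data for illustration only.
random.seed(1)
data = []
for _ in range(200):
    x1 = random.uniform(-1, 1)
    a1 = random.choice([-1, 1])
    x2 = 0.5 * x1 + 0.3 * a1  # intermediate covariate observed before stage 2
    a2 = random.choice([-1, 1])
    y = 1 + 0.5 * x2 + a2 * (0.4 - 0.8 * x2)
    data.append({"x1": x1, "a1": a1, "x2": x2, "a2": a2, "y": y})

beta1, beta2 = q_learning(data)
print([round(b, 3) for b in beta2])  # -> [1.0, 0.5, 0.4, -0.8]
print(rule(beta2, 0.0), rule(beta2, 1.0))  # -> 1 -1
```

Because the pseudo-outcome takes a maximum over stage-2 treatments, it is generally a nonlinear function of the stage-1 history. A linear stage-1 Q-function can therefore be misspecified even when the stage-2 model is correct, which is one way the outcome-model misspecification mentioned in the abstract can arise. The proposed method replaces these regressions with GEE models fit to the repeated-measures outcomes; that extension is not sketched here.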
